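Kubernetes the Hard Way

This post installs a Kubernetes cluster by following the GitHub project kubernetes-the-hard-way.

kubernetes-the-hard-way deploys three worker nodes and three master (control plane) nodes, and the master nodes do not run Pods. It uses the bridge CNI plugin, so container traffic between hosts requires manually configured entries in each host's routing table.

This post uses two VMware Fusion virtual machines running Ubuntu Server 18.04. One is configured as the master node and the other as a worker node, and the master node also acts as a worker node. Calico is used as the CNI plugin, and the setup also fixes the problem of external domain names failing to resolve.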

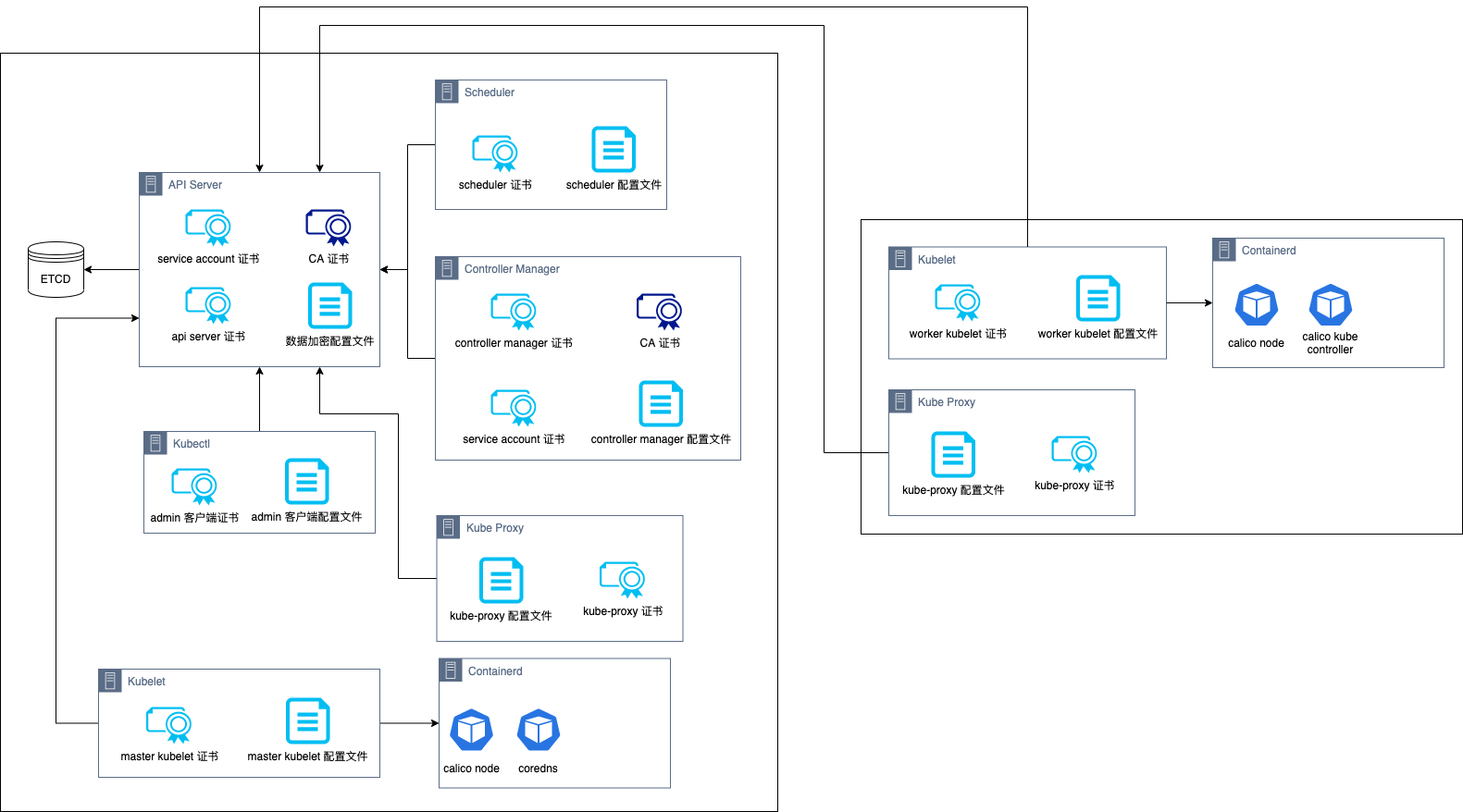

总体架构图:

Install the client tools on the Master node

Install CFSSL

wget -q --show-progress --https-only --timestamping \

https://storage.googleapis.com/kubernetes-the-hard-way/cfssl/linux/cfssl \

https://storage.googleapis.com/kubernetes-the-hard-way/cfssl/linux/cfssljson

chmod +x cfssl cfssljson && sudo mv cfssl cfssljson /usr/local/bin/

Install kubectl

wget https://storage.googleapis.com/kubernetes-release/release/v1.15.7/bin/linux/amd64/kubectl

chmod +x kubectl && sudo mv kubectl /usr/local/bin/

Generate certificates

Generate the CA certificate

cat > ca-config.json <<EOF

{

"signing": {

"default": {

"expiry": "8760h"

},

"profiles": {

"kubernetes": {

"usages": ["signing", "key encipherment", "server auth", "client auth"],

"expiry": "8760h"

}

}

}

}

EOF

cat > ca-csr.json <<EOF

{

"CN": "Kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "US",

"L": "Portland",

"O": "Kubernetes",

"OU": "CA",

"ST": "Oregon"

}

]

}

EOF

cfssl gencert -initca ca-csr.json | cfssljson -bare ca

Generate the admin client certificate

cat > admin-csr.json <<EOF

{

"CN": "admin",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "US",

"L": "Portland",

"O": "system:masters",

"OU": "Kubernetes The Hard Way",

"ST": "Oregon"

}

]

}

EOF

cfssl gencert \

-ca=ca.pem \

-ca-key=ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

admin-csr.json | cfssljson -bare admin

Generate the kubelet client certificates for the two machines

cat > yangxikun-csr.json <<EOF

{

"CN": "system:node:yangxikun",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "US",

"L": "Portland",

"O": "system:nodes",

"OU": "Kubernetes The Hard Way",

"ST": "Oregon"

}

]

}

EOF

cfssl gencert \

-ca=ca.pem \

-ca-key=ca-key.pem \

-config=ca-config.json \

-hostname=yangxikun,172.16.191.128 \

-profile=kubernetes \

yangxikun-csr.json | cfssljson -bare yangxikun

# -----------------------------------------------

cat > yangxikun2-csr.json <<EOF

{

"CN": "system:node:yangxikun2",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "US",

"L": "Portland",

"O": "system:nodes",

"OU": "Kubernetes The Hard Way",

"ST": "Oregon"

}

]

}

EOF

cfssl gencert \

-ca=ca.pem \

-ca-key=ca-key.pem \

-config=ca-config.json \

-hostname=yangxikun2,172.16.191.129 \

-profile=kubernetes \

yangxikun2-csr.json | cfssljson -bare yangxikun2
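The two kubelet CSR files differ only in the node name, so they could also be produced in a loop. The following is a minimal sketch rather than part of the original procedure: the helper name node_csr_json is made up for illustration, and the node names and IPs are the ones used in this post.

```shell
# Hypothetical helper: print the kubelet CSR JSON for the node name given as $1.
node_csr_json() {
  cat <<EOF
{
  "CN": "system:node:$1",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "US",
      "L": "Portland",
      "O": "system:nodes",
      "OU": "Kubernetes The Hard Way",
      "ST": "Oregon"
    }
  ]
}
EOF
}

# name:ip pairs as used in this post; adjust them for other hosts.
for entry in yangxikun:172.16.191.128 yangxikun2:172.16.191.129; do
  name=${entry%%:*}
  ip=${entry#*:}
  node_csr_json "$name" > "${name}-csr.json"
  echo "wrote ${name}-csr.json (hostname=${name},${ip})"
  # The cfssl gencert call shown above would follow here, with
  # -hostname="${name},${ip}" and "${name}-csr.json" as its input.
done
```

The CN must stay in the form system:node:<node name> with O set to system:nodes, as in the files above.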

Generate the Controller Manager client certificate

cat > kube-controller-manager-csr.json <<EOF

{

"CN": "system:kube-controller-manager",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "US",

"L": "Portland",

"O": "system:kube-controller-manager",

"OU": "Kubernetes The Hard Way",

"ST": "Oregon"

}

]

}

EOF

cfssl gencert \

-ca=ca.pem \

-ca-key=ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

kube-controller-manager-csr.json | cfssljson -bare kube-controller-manager

Generate the Kube Proxy certificate

cat > kube-proxy-csr.json <<EOF

{

"CN": "system:kube-proxy",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "US",

"L": "Portland",

"O": "system:node-proxier",

"OU": "Kubernetes The Hard Way",

"ST": "Oregon"

}

]

}

EOF

cfssl gencert \

-ca=ca.pem \

-ca-key=ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

kube-proxy-csr.json | cfssljson -bare kube-proxy

Generate the Scheduler certificate

cat > kube-scheduler-csr.json <<EOF

{

"CN": "system:kube-scheduler",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "US",

"L": "Portland",

"O": "system:kube-scheduler",

"OU": "Kubernetes The Hard Way",

"ST": "Oregon"

}

]

}

EOF

cfssl gencert \

-ca=ca.pem \

-ca-key=ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

kube-scheduler-csr.json | cfssljson -bare kube-scheduler

Generate the API Server certificate

KUBERNETES_HOSTNAMES=kubernetes,kubernetes.default,kubernetes.default.svc,kubernetes.default.svc.cluster,kubernetes.default.svc.cluster.local

cat > kubernetes-csr.json <<EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "US",

"L": "Portland",

"O": "Kubernetes",

"OU": "Kubernetes The Hard Way",

"ST": "Oregon"

}

]

}

EOF

cfssl gencert \

-ca=ca.pem \

-ca-key=ca-key.pem \

-config=ca-config.json \

-hostname=10.32.0.1,172.16.191.128,127.0.0.1,${KUBERNETES_HOSTNAMES} \

-profile=kubernetes \

kubernetes-csr.json | cfssljson -bare kubernetes

Generate the Service Account certificate

cat > service-account-csr.json <<EOF

{

"CN": "service-accounts",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "US",

"L": "Portland",

"O": "Kubernetes",

"OU": "Kubernetes The Hard Way",

"ST": "Oregon"

}

]

}

EOF

cfssl gencert \

-ca=ca.pem \

-ca-key=ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

service-account-csr.json | cfssljson -bare service-account

Distribute the certificates

scp ca.pem yangxikun2.pem yangxikun2-key.pem yangxikun@172.16.191.129:~/

Generate configuration files

Generate the kubelet kubeconfig files for the two machines

kubectl config set-cluster kubernetes-the-hard-way \

--certificate-authority=ca.pem \

--embed-certs=true \

--server=https://172.16.191.128:6443 \

--kubeconfig=yangxikun.kubeconfig

kubectl config set-credentials system:node:yangxikun \

--client-certificate=yangxikun.pem \

--client-key=yangxikun-key.pem \

--embed-certs=true \

--kubeconfig=yangxikun.kubeconfig

kubectl config set-context default \

--cluster=kubernetes-the-hard-way \

--user=system:node:yangxikun \

--kubeconfig=yangxikun.kubeconfig

kubectl config use-context default --kubeconfig=yangxikun.kubeconfig

# --------------------------------------------------

kubectl config set-cluster kubernetes-the-hard-way \

--certificate-authority=ca.pem \

--embed-certs=true \

--server=https://172.16.191.128:6443 \

--kubeconfig=yangxikun2.kubeconfig

kubectl config set-credentials system:node:yangxikun2 \

--client-certificate=yangxikun2.pem \

--client-key=yangxikun2-key.pem \

--embed-certs=true \

--kubeconfig=yangxikun2.kubeconfig

kubectl config set-context default \

--cluster=kubernetes-the-hard-way \

--user=system:node:yangxikun2 \

--kubeconfig=yangxikun2.kubeconfig

kubectl config use-context default --kubeconfig=yangxikun2.kubeconfig

Generate the kube-proxy kubeconfig file

kubectl config set-cluster kubernetes-the-hard-way \

--certificate-authority=ca.pem \

--embed-certs=true \

--server=https://172.16.191.128:6443 \

--kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials system:kube-proxy \

--client-certificate=kube-proxy.pem \

--client-key=kube-proxy-key.pem \

--embed-certs=true \

--kubeconfig=kube-proxy.kubeconfig

kubectl config set-context default \

--cluster=kubernetes-the-hard-way \

--user=system:kube-proxy \

--kubeconfig=kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

Generate the Controller Manager kubeconfig file

kubectl config set-cluster kubernetes-the-hard-way \

--certificate-authority=ca.pem \

--embed-certs=true \

--server=https://127.0.0.1:6443 \

--kubeconfig=kube-controller-manager.kubeconfig

kubectl config set-credentials system:kube-controller-manager \

--client-certificate=kube-controller-manager.pem \

--client-key=kube-controller-manager-key.pem \

--embed-certs=true \

--kubeconfig=kube-controller-manager.kubeconfig

kubectl config set-context default \

--cluster=kubernetes-the-hard-way \

--user=system:kube-controller-manager \

--kubeconfig=kube-controller-manager.kubeconfig

kubectl config use-context default --kubeconfig=kube-controller-manager.kubeconfig

Generate the Scheduler kubeconfig file

kubectl config set-cluster kubernetes-the-hard-way \

--certificate-authority=ca.pem \

--embed-certs=true \

--server=https://127.0.0.1:6443 \

--kubeconfig=kube-scheduler.kubeconfig

kubectl config set-credentials system:kube-scheduler \

--client-certificate=kube-scheduler.pem \

--client-key=kube-scheduler-key.pem \

--embed-certs=true \

--kubeconfig=kube-scheduler.kubeconfig

kubectl config set-context default \

--cluster=kubernetes-the-hard-way \

--user=system:kube-scheduler \

--kubeconfig=kube-scheduler.kubeconfig

kubectl config use-context default --kubeconfig=kube-scheduler.kubeconfig

Generate the admin client kubeconfig file

kubectl config set-cluster kubernetes-the-hard-way \

--certificate-authority=ca.pem \

--embed-certs=true \

--server=https://127.0.0.1:6443 \

--kubeconfig=admin.kubeconfig

kubectl config set-credentials admin \

--client-certificate=admin.pem \

--client-key=admin-key.pem \

--embed-certs=true \

--kubeconfig=admin.kubeconfig

kubectl config set-context default \

--cluster=kubernetes-the-hard-way \

--user=admin \

--kubeconfig=admin.kubeconfig

kubectl config use-context default --kubeconfig=admin.kubeconfig
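Every kubeconfig in this section is built from the same four kubectl config calls, with only the user name, certificate prefix, server URL and output file changing. A minimal sketch of a wrapper is shown below. The function name make_kubeconfig and the KUBECTL override variable are assumptions made for this example and are not part of the original steps.

```shell
# KUBECTL is an assumption of this sketch: it allows the binary to be overridden.
KUBECTL="${KUBECTL:-kubectl}"

# make_kubeconfig USER CERT_PREFIX SERVER_URL OUTPUT_FILE
# Expects ca.pem, CERT_PREFIX.pem and CERT_PREFIX-key.pem in the current directory.
make_kubeconfig() {
  "$KUBECTL" config set-cluster kubernetes-the-hard-way \
    --certificate-authority=ca.pem \
    --embed-certs=true \
    --server="$3" \
    --kubeconfig="$4" &&
  "$KUBECTL" config set-credentials "$1" \
    --client-certificate="$2.pem" \
    --client-key="$2-key.pem" \
    --embed-certs=true \
    --kubeconfig="$4" &&
  "$KUBECTL" config set-context default \
    --cluster=kubernetes-the-hard-way \
    --user="$1" \
    --kubeconfig="$4" &&
  "$KUBECTL" config use-context default --kubeconfig="$4"
}

# Example calls matching the files generated in this section (not executed here):
# make_kubeconfig system:node:yangxikun2 yangxikun2 https://172.16.191.128:6443 yangxikun2.kubeconfig
# make_kubeconfig admin admin https://127.0.0.1:6443 admin.kubeconfig
```

Components that run on the master itself (Controller Manager, Scheduler, admin) point at https://127.0.0.1:6443, while the kubelet and kube-proxy files use the master's address 172.16.191.128.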

Distribute the kubeconfig files

scp yangxikun2.kubeconfig kube-proxy.kubeconfig yangxikun@172.16.191.129:~/

Generate the data encryption config file and key

ENCRYPTION_KEY=$(head -c 32 /dev/urandom | base64)

cat > encryption-config.yaml <<EOF
kind: EncryptionConfig
apiVersion: v1
resources:
  - resources:
      - secrets
    providers:
      - aescbc:
          keys:
            - name: key1
              secret: ${ENCRYPTION_KEY}
      - identity: {}
EOF
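The key written into encryption-config.yaml is 32 random bytes in base64 form. As a quick sanity check, the sketch below decodes the value again and counts the bytes. The variable key_bytes is introduced only for this example, and the key is generated only when ENCRYPTION_KEY is not already set, so an existing key is left untouched.

```shell
# Reuse the key from the step above; generate one only if the variable is empty.
: "${ENCRYPTION_KEY:=$(head -c 32 /dev/urandom | base64)}"

# Decode the base64 text and count the resulting bytes.
key_bytes=$(printf '%s' "$ENCRYPTION_KEY" | base64 --decode | wc -c | tr -d ' ')

if [ "$key_bytes" -eq 32 ]; then
  echo "encryption key length OK"
else
  echo "unexpected encryption key length: $key_bytes bytes" >&2
fi
```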

Bring up the Master node

Start etcd

wget -q --show-progress --https-only --timestamping \
"https://github.com/etcd-io/etcd/releases/download/v3.4.0/etcd-v3.4.0-linux-amd64.tar.gz"

tar -xvf etcd-v3.4.0-linux-amd64.tar.gz

sudo mv etcd-v3.4.0-linux-amd64/etcd* /usr/local/bin/

sudo mkdir -p /etc/etcd /var/lib/etcd

sudo cp ca.pem kubernetes-key.pem kubernetes.pem /etc/etcd/

ETCD_NAME=$(hostname -s)

cat <<EOF | sudo tee /etc/systemd/system/etcd.service

[Unit]

Description=etcd

Documentation=https://github.com/coreos

[Service]

Type=notify

ExecStart=/usr/local/bin/etcd \\

--name ${ETCD_NAME} \\

--cert-file=/etc/etcd/kubernetes.pem \\

--key-file=/etc/etcd/kubernetes-key.pem \\

--peer-cert-file=/etc/etcd/kubernetes.pem \\

--peer-key-file=/etc/etcd/kubernetes-key.pem \\

--trusted-ca-file=/etc/etcd/ca.pem \\

--peer-trusted-ca-file=/etc/etcd/ca.pem \\

--peer-client-cert-auth \\

--client-cert-auth \\

--initial-advertise-peer-urls https://172.16.191.128:2380 \\

--listen-peer-urls https://172.16.191.128:2380 \\

--listen-client-urls https://172.16.191.128:2379,https://127.0.0.1:2379 \\

--advertise-client-urls https://172.16.191.128:2379 \\

--initial-cluster-token etcd-cluster-0 \\

--initial-cluster yangxikun=https://172.16.191.128:2380 \\

--initial-cluster-state new \\

--data-dir=/var/lib/etcd

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

EOF

sudo systemctl daemon-reload

sudo systemctl enable etcd

sudo systemctl start etcd

sudo ETCDCTL_API=3 etcdctl member list \

--endpoints=https://127.0.0.1:2379 \

--cacert=/etc/etcd/ca.pem \

--cert=/etc/etcd/kubernetes.pem \

--key=/etc/etcd/kubernetes-key.pem

Install the Master control plane components

sudo mkdir -p /etc/kubernetes/config

wget -q --show-progress --https-only --timestamping \
"https://storage.googleapis.com/kubernetes-release/release/v1.15.7/bin/linux/amd64/kube-apiserver" \
"https://storage.googleapis.com/kubernetes-release/release/v1.15.7/bin/linux/amd64/kube-controller-manager" \
"https://storage.googleapis.com/kubernetes-release/release/v1.15.7/bin/linux/amd64/kube-scheduler" \
"https://storage.googleapis.com/kubernetes-release/release/v1.15.7/bin/linux/amd64/kubectl"

chmod +x kube-apiserver kube-controller-manager kube-scheduler kubectl

sudo mv kube-apiserver kube-controller-manager kube-scheduler kubectl /usr/local/bin/

Deploy the API Server

sudo mkdir -p /var/lib/kubernetes/

sudo mv ca.pem ca-key.pem kubernetes-key.pem kubernetes.pem \

service-account-key.pem service-account.pem \

encryption-config.yaml /var/lib/kubernetes/

cat <<EOF | sudo tee /etc/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

[Service]

ExecStart=/usr/local/bin/kube-apiserver \\

--advertise-address=172.16.191.128 \\

--allow-privileged=true \\

--apiserver-count=3 \\

--audit-log-maxage=30 \\

--audit-log-maxbackup=3 \\

--audit-log-maxsize=100 \\

--audit-log-path=/var/log/audit.log \\

--authorization-mode=Node,RBAC \\

--bind-address=0.0.0.0 \\

--client-ca-file=/var/lib/kubernetes/ca.pem \\

--enable-admission-plugins=NamespaceLifecycle,NodeRestriction,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota \\

--etcd-cafile=/var/lib/kubernetes/ca.pem \\

--etcd-certfile=/var/lib/kubernetes/kubernetes.pem \\

--etcd-keyfile=/var/lib/kubernetes/kubernetes-key.pem \\

--etcd-servers=https://172.16.191.128:2379 \\

--event-ttl=1h \\

--encryption-provider-config=/var/lib/kubernetes/encryption-config.yaml \\

--kubelet-certificate-authority=/var/lib/kubernetes/ca.pem \\

--kubelet-client-certificate=/var/lib/kubernetes/kubernetes.pem \\

--kubelet-client-key=/var/lib/kubernetes/kubernetes-key.pem \\

--kubelet-https=true \\

--runtime-config=api/all \\

--service-account-key-file=/var/lib/kubernetes/service-account.pem \\

--service-cluster-ip-range=10.32.0.0/24 \\

--service-node-port-range=30000-32767 \\

--tls-cert-file=/var/lib/kubernetes/kubernetes.pem \\

--tls-private-key-file=/var/lib/kubernetes/kubernetes-key.pem \\

--v=2

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

EOF

Configure the Controller Manager

sudo mv kube-controller-manager.kubeconfig /var/lib/kubernetes/

cat <<EOF | sudo tee /etc/systemd/system/kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

[Service]

ExecStart=/usr/local/bin/kube-controller-manager \\

--address=0.0.0.0 \\

--cluster-cidr=10.200.0.0/16 \\

--cluster-name=kubernetes \\

--cluster-signing-cert-file=/var/lib/kubernetes/ca.pem \\

--cluster-signing-key-file=/var/lib/kubernetes/ca-key.pem \\

--kubeconfig=/var/lib/kubernetes/kube-controller-manager.kubeconfig \\

--leader-elect=true \\

--root-ca-file=/var/lib/kubernetes/ca.pem \\

--service-account-private-key-file=/var/lib/kubernetes/service-account-key.pem \\

--service-cluster-ip-range=10.32.0.0/24 \\

--use-service-account-credentials=true \\

--v=2

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

EOF

Configure the Scheduler

sudo mv kube-scheduler.kubeconfig /var/lib/kubernetes/

cat <<EOF | sudo tee /etc/kubernetes/config/kube-scheduler.yaml
apiVersion: kubescheduler.config.k8s.io/v1alpha1
kind: KubeSchedulerConfiguration
clientConnection:
  kubeconfig: "/var/lib/kubernetes/kube-scheduler.kubeconfig"
leaderElection:
  leaderElect: true
EOF

cat <<EOF | sudo tee /etc/systemd/system/kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

[Service]

ExecStart=/usr/local/bin/kube-scheduler \\

--config=/etc/kubernetes/config/kube-scheduler.yaml \\

--v=2

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

EOF

Start the services

sudo systemctl daemon-reload

sudo systemctl enable kube-apiserver kube-controller-manager kube-scheduler

sudo systemctl start kube-apiserver kube-controller-manager kube-scheduler

Check the status of the system components

kubectl get componentstatuses --kubeconfig admin.kubeconfig
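The control plane services can take a few seconds to come up after systemctl start, so the status check may fail on the first try. A small retry helper is one way to handle this. The sketch below is illustrative only: the function name retry and the attempt and delay values in the example call are assumptions, not part of the original procedure.

```shell
# retry ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
# Runs COMMAND until it succeeds, at most ATTEMPTS times, sleeping DELAY_SECONDS
# between tries. Returns 0 on the first success, 1 if every attempt fails.
retry() {
  retry_attempts=$1
  retry_delay=$2
  shift 2
  retry_n=1
  while ! "$@"; do
    if [ "$retry_n" -ge "$retry_attempts" ]; then
      return 1
    fi
    retry_n=$((retry_n + 1))
    sleep "$retry_delay"
  done
}

# Example (not executed here): up to 10 tries, 3 seconds apart.
# retry 10 3 kubectl get componentstatuses --kubeconfig admin.kubeconfig
```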

RBAC authorization for API Server access to the kubelet

cat <<EOF | kubectl apply --kubeconfig admin.kubeconfig -f -
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRole
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:kube-apiserver-to-kubelet
rules:
  - apiGroups:
      - ""
    resources:
      - nodes/proxy
      - nodes/stats
      - nodes/log
      - nodes/spec
      - nodes/metrics
    verbs:
      - "*"
EOF

cat <<EOF | kubectl apply --kubeconfig admin.kubeconfig -f -
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: system:kube-apiserver
  namespace: ""
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:kube-apiserver-to-kubelet
subjects:
  - apiGroup: rbac.authorization.k8s.io
    kind: User
    name: kubernetes
EOF

Bring up the Worker node

Install dependencies

sudo apt-get update

sudo apt-get -y install socat conntrack ipset

sudo swapoff -a  # disable swap

sudo vim /etc/fstab  # comment out the swap mount so that swap is not re-enabled after a reboot
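The manual fstab edit could also be scripted with sed. The following is a rough sketch, assuming the swap entry is an ordinary fstab line whose type field is swap. The function name comment_out_swap is made up for this example, and a backup of the file is kept with a .bak suffix.

```shell
# comment_out_swap FILE
# Prefixes every active (not yet commented) swap entry in FILE with '#'.
# The original file is preserved as FILE.bak.
comment_out_swap() {
  sed -E -i.bak '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$1"
}

# On the node itself this needs root privileges, for example (not executed here):
# sudo sed -E -i.bak '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' /etc/fstab
```

It is worth reviewing /etc/fstab afterwards, since a mistake in this file can prevent the machine from booting cleanly.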

Install components

wget -q --show-progress --https-only --timestamping \

https://github.com/kubernetes-sigs/cri-tools/releases/download/v1.15.0/crictl-v1.15.0-linux-amd64.tar.gz \

https://github.com/opencontainers/runc/releases/download/v1.0.0-rc9/runc.amd64 \

https://github.com/containernetworking/plugins/releases/download/v0.8.3/cni-plugins-linux-amd64-v0.8.3.tgz \

https://github.com/containerd/containerd/releases/download/v1.2.10/containerd-1.2.10.linux-amd64.tar.gz \

https://storage.googleapis.com/kubernetes-release/release/v1.15.7/bin/linux/amd64/kubectl \

https://storage.googleapis.com/kubernetes-release/release/v1.15.7/bin/linux/amd64/kube-proxy \

https://storage.googleapis.com/kubernetes-release/release/v1.15.7/bin/linux/amd64/kubelet

sudo mkdir -p \

/etc/cni/net.d \

/opt/cni/bin \

/var/lib/kubelet \

/var/lib/kube-proxy \

/var/lib/kubernetes \

/var/run/kubernetes

mkdir containerd

tar -xvf crictl-v1.15.0-linux-amd64.tar.gz

tar -xvf containerd-1.2.10.linux-amd64.tar.gz -C containerd

sudo tar -xvf cni-plugins-linux-amd64-v0.8.3.tgz -C /opt/cni/bin/

sudo mv runc.amd64 runc

chmod +x crictl kubectl kube-proxy kubelet runc

sudo mv crictl kubectl kube-proxy kubelet runc /usr/local/bin/

sudo mv containerd/bin/* /bin/

Configure the CNI network

cat <<EOF | sudo tee /etc/cni/net.d/99-loopback.conf

{

"cniVersion": "0.3.1",

"name": "lo",

"type": "loopback"

}

EOF

Configure containerd

sudo mkdir -p /etc/containerd/

cat << EOF | sudo tee /etc/containerd/config.toml

[plugins]

[plugins.cri.containerd]

snapshotter = "overlayfs"

[plugins.cri.containerd.default_runtime]

runtime_type = "io.containerd.runtime.v1.linux"

runtime_engine = "/usr/local/bin/runc"

runtime_root = ""

EOF

cat <<EOF | sudo tee /etc/systemd/system/containerd.service

[Unit]

Description=containerd container runtime

Documentation=https://containerd.io

After=network.target

[Service]

ExecStartPre=/sbin/modprobe overlay

ExecStart=/bin/containerd

Restart=always

RestartSec=5

Delegate=yes

KillMode=process

OOMScoreAdjust=-999

LimitNOFILE=1048576

LimitNPROC=infinity

LimitCORE=infinity

[Install]

WantedBy=multi-user.target

EOF

Configure kubelet

sudo mv yangxikun2-key.pem yangxikun2.pem /var/lib/kubelet/

sudo mv yangxikun2.kubeconfig /var/lib/kubelet/kubeconfig

sudo mv ca.pem /var/lib/kubernetes/

cat <<EOF | sudo tee /var/lib/kubelet/kubelet-config.yaml
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
authentication:
  anonymous:
    enabled: false
  webhook:
    enabled: true
  x509:
    clientCAFile: "/var/lib/kubernetes/ca.pem"
authorization:
  mode: Webhook
clusterDomain: "cluster.local"
clusterDNS:
  - "10.32.0.10"
podCIDR: "10.200.0.0/24"
resolvConf: "/run/systemd/resolve/resolv.conf"
runtimeRequestTimeout: "15m"
tlsCertFile: "/var/lib/kubelet/yangxikun2.pem"
tlsPrivateKeyFile: "/var/lib/kubelet/yangxikun2-key.pem"
EOF

cat <<EOF | sudo tee /etc/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet

Documentation=https://github.com/kubernetes/kubernetes

After=containerd.service

Requires=containerd.service

[Service]

ExecStart=/usr/local/bin/kubelet \\

--config=/var/lib/kubelet/kubelet-config.yaml \\

--container-runtime=remote \\

--container-runtime-endpoint=unix:///var/run/containerd/containerd.sock \\

--image-pull-progress-deadline=2m \\

--kubeconfig=/var/lib/kubelet/kubeconfig \\

--network-plugin=cni \\

--register-node=true \\

--v=2

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

EOF

Configure kube-proxy

sudo mv kube-proxy.kubeconfig /var/lib/kube-proxy/kubeconfig

cat <<EOF | sudo tee /var/lib/kube-proxy/kube-proxy-config.yaml
kind: KubeProxyConfiguration
apiVersion: kubeproxy.config.k8s.io/v1alpha1
clientConnection:
  kubeconfig: "/var/lib/kube-proxy/kubeconfig"
mode: "iptables"
clusterCIDR: "10.200.0.0/16"
EOF

cat <<EOF | sudo tee /etc/systemd/system/kube-proxy.service

[Unit]

Description=Kubernetes Kube Proxy

Documentation=https://github.com/kubernetes/kubernetes

[Service]

ExecStart=/usr/local/bin/kube-proxy \\

--config=/var/lib/kube-proxy/kube-proxy-config.yaml

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

EOF

Start the services

sudo systemctl daemon-reload

sudo systemctl enable containerd kubelet kube-proxy

sudo systemctl start containerd kubelet kube-proxy

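Configure the Master node to run Pods as well

Installing dependencies, installing components, configuring the CNI network, configuring containerd, configuring kube-proxy, and starting the services are the same as for the Worker node.

Configure kubelet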

sudo mv yangxikun-key.pem yangxikun.pem /var/lib/kubelet/

sudo mv yangxikun.kubeconfig /var/lib/kubelet/kubeconfig

cat <<EOF | sudo tee /var/lib/kubelet/kubelet-config.yaml
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
authentication:
  anonymous:
    enabled: false
  webhook:
    enabled: true
  x509:
    clientCAFile: "/var/lib/kubernetes/ca.pem"
authorization:
  mode: Webhook
clusterDomain: "cluster.local"
clusterDNS:
  - "10.32.0.10"
podCIDR: "10.200.1.0/24"
resolvConf: "/run/systemd/resolve/resolv.conf"
runtimeRequestTimeout: "15m"
tlsCertFile: "/var/lib/kubelet/yangxikun.pem"
tlsPrivateKeyFile: "/var/lib/kubelet/yangxikun-key.pem"
EOF

cat <<EOF | sudo tee /etc/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet

Documentation=https://github.com/kubernetes/kubernetes

After=containerd.service

Requires=containerd.service

[Service]

ExecStart=/usr/local/bin/kubelet \\

--config=/var/lib/kubelet/kubelet-config.yaml \\

--container-runtime=remote \\

--container-runtime-endpoint=unix:///var/run/containerd/containerd.sock \\

--image-pull-progress-deadline=2m \\

--kubeconfig=/var/lib/kubelet/kubeconfig \\

--network-plugin=cni \\

--register-node=true \\

--v=2

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

EOF

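Install Calico

Edit /etc/sysctl.conf and uncomment the line #net.ipv4.ip_forward=1 to enable IPv4 packet forwarding in the kernel; otherwise tunl0 drops the packets it receives. Apply the change with sudo sysctl -p or by rebooting.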
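The sysctl.conf change can also be made non-interactively. The sketch below is a minimal illustration, assuming the file still contains the stock commented-out net.ipv4.ip_forward line. The function name enable_ip_forward is hypothetical, and a .bak backup of the file is kept.

```shell
# enable_ip_forward FILE
# Removes the leading '#' from the net.ipv4.ip_forward=1 line in FILE.
# Spaces around '=' are tolerated; the original file is kept as FILE.bak.
enable_ip_forward() {
  sed -E -i.bak 's/^#[[:space:]]*(net\.ipv4\.ip_forward[[:space:]]*=[[:space:]]*1)/\1/' "$1"
}

# On each node this needs root privileges, for example (not executed here):
# sudo sed -E -i.bak 's/^#[[:space:]]*(net\.ipv4\.ip_forward[[:space:]]*=[[:space:]]*1)/\1/' /etc/sysctl.conf
# sudo sysctl -p                      # reload /etc/sysctl.conf
# cat /proc/sys/net/ipv4/ip_forward   # shows 1 once forwarding is enabled
```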

Download the YAML file

curl https://docs.projectcalico.org/v3.11/manifests/calico.yaml -O

Modify the YAML file

POD_CIDR="10.200.0.0/16"

sed -i -e "s?192.168.0.0/16?$POD_CIDR?g" calico.yaml

# Add the following environment variable to the calico-node container in calico.yaml:

# - name: IP_AUTODETECTION_METHOD

#   value: "can-reach=172.16.191.128"
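Before applying the manifest, it can help to confirm that the pod CIDR substitution took effect. Note that POD_CIDR has to be assigned as its own statement before sed runs: the shell expands $POD_CIDR in the sed expression first, so an assignment prefixed to the same command would arrive too late. The sketch below assumes the manifest still contains Calico's default pool 192.168.0.0/16. The function names set_pod_cidr and pod_cidr_applied are made up for this example.

```shell
# Pod network used throughout this post.
POD_CIDR="10.200.0.0/16"

# set_pod_cidr FILE: replace Calico's default pool with $POD_CIDR in FILE.
# A backup of the original is kept as FILE.bak.
set_pod_cidr() {
  sed -i.bak -e "s?192.168.0.0/16?$POD_CIDR?g" "$1"
}

# pod_cidr_applied FILE: succeeds only if the default pool is gone
# and $POD_CIDR is present in FILE.
pod_cidr_applied() {
  ! grep -q '192\.168\.0\.0/16' "$1" && grep -qF "$POD_CIDR" "$1"
}

# Example (not executed here):
# set_pod_cidr calico.yaml && pod_cidr_applied calico.yaml && echo "pod CIDR updated"
```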

Install

kubectl apply -f calico.yaml

Verify: run the following on both machines and make sure every peer is in the Established state

sudo calicoctl node status

Install CoreDNS

Download and modify the YAML file

curl -O https://storage.googleapis.com/kubernetes-the-hard-way/coredns.yaml

vim coredns.yaml

# Set replicas: 1

# Add forward . /etc/resolv.conf to the Corefile so that external domain names can be resolved

Install

kubectl apply -f coredns.yaml
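Once CoreDNS is running, name resolution can be checked from inside a Pod. The sketch below follows the busybox smoke test from kubernetes-the-hard-way and adds an external lookup. The function name dns_smoke_test, the KUBECTL override variable, the 120s timeout and the external name github.com are assumptions chosen for illustration. The --generator=run-pod/v1 flag matches the kubectl v1.15 used in this post and may not be accepted by newer releases.

```shell
# KUBECTL is an assumption of this sketch: it allows the binary to be overridden.
KUBECTL="${KUBECTL:-kubectl}"

# dns_smoke_test: start a busybox Pod, wait for it, then resolve one
# cluster-internal name and one external name from inside the Pod.
dns_smoke_test() {
  "$KUBECTL" run --generator=run-pod/v1 busybox --image=busybox:1.28 --command -- sleep 3600 &&
  "$KUBECTL" wait --for=condition=Ready pod/busybox --timeout=120s &&
  "$KUBECTL" exec busybox -- nslookup kubernetes &&
  "$KUBECTL" exec busybox -- nslookup github.com
}

# Example (not executed here):
# dns_smoke_test && kubectl delete pod busybox
```

If the internal lookup works but the external one fails, the forward setting in the Corefile is the first thing to check.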